Stanford University’s Center for Research on Foundation Models, part of its Institute for Human-Centered Artificial Intelligence (Stanford HAI), has published a Foundation Model Transparency Index (FMTI) that rates ten foundation model companies.

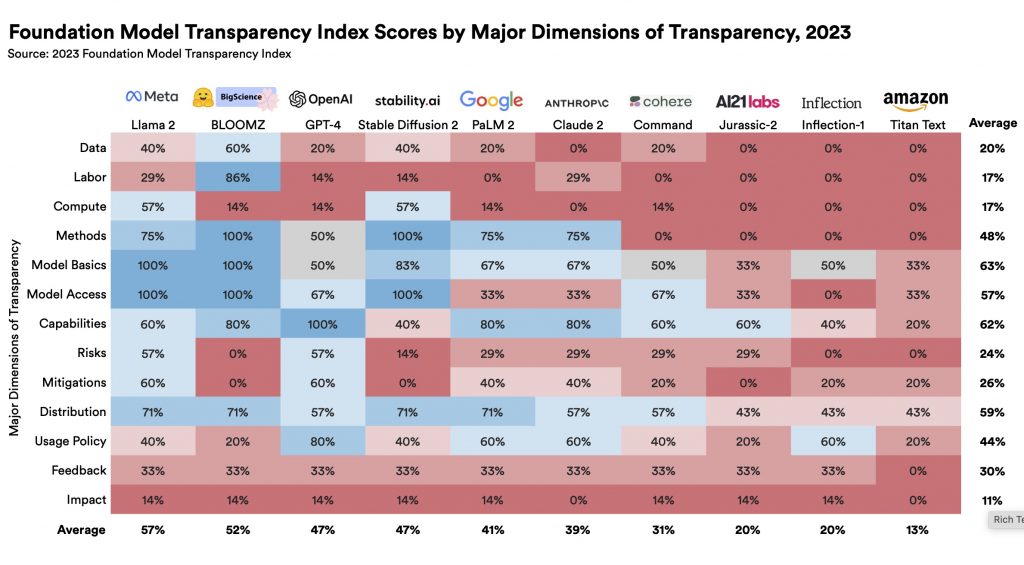

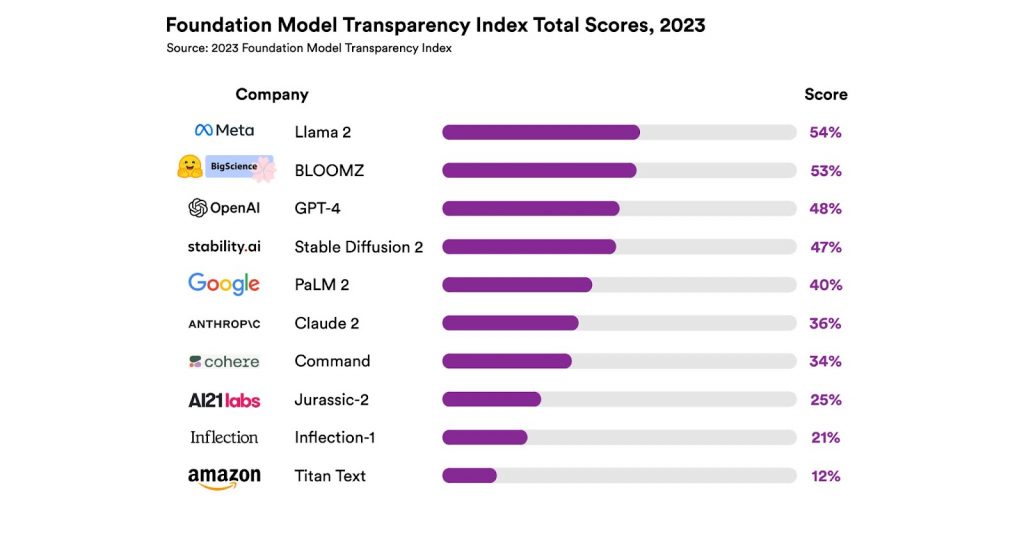

Stanford uses the term ‘foundation models’ to refer to models that are trained “on a huge amount of data, and adapted to many applications.” The Index evaluated 100 indicators of transparency and scored ten publicly available models on a scale of 0 to 100. Scores ranged from 54 down to 12, which shows that every developer has substantial room for improvement.

Data transparency

Data transparency in generative AI models has emerged as a concern for rights-holders. The data indicators evaluated in the Index include data license (“For all data used in building the model, is the associated license status disclosed?”), copyrighted data (“For all data used in building the model, is the associated copyright status disclosed?”), data sources, data creators, source selection, data curation, data segmentation, filtration of harmful data, and personal information in data.

Of the ten models rated, only one exceeded a data transparency score of 40% (BLOOMZ, at 60%). The others scored 40% or less, and Amazon scored zero for data transparency.

The study takes the position that the less transparent a developer is about a model's characteristics, algorithms, data, and other attributes, the less confidence users can have that the model will produce meaningful results.

Guidance for policymakers

Another hope is that the FMTI will guide policymakers toward effective regulation of foundation models. “For many policymakers in the EU as well as in the U.S., the U.K., China, Canada, the G7, and a wide range of other governments, transparency is a major policy priority,” said Rishi Bommasani, Society Lead at the Center for Research on Foundation Models (CRFM), within Stanford HAI.

Stanford’s Center for Research on Foundation Models is an initiative that brings together faculty, students, postdoctoral fellows, and researchers across 10 departments who share an interest in studying and building responsible foundation models.

Further reading

Introducing the Foundation Model Transparency Index. Katharine Miller. Stanford Institute for Human-Centered Artificial Intelligence (HAI), October 18, 2023.

The Foundation Model Transparency Index. Rishi Bommasani, Kevin Klyman, Shayne Longpre, Sayash Kapoor, Nestor Maslej, Betty Xiong, Daniel Zhang, Percy Liang (Stanford University, Massachusetts Institute of Technology, Princeton University). Stanford Center for Research on Foundation Models (CRFM) and Stanford Institute for Human-Centered Artificial Intelligence (HAI).

Why it matters

While the study does not explicitly address the possibility that AI models are trained on stolen content or on data obtained from fraudulent sources, the fact that none of the ten models studied is particularly transparent about its data may raise that concern.